The Art of De-Googling

I recently decided to replace all my major Google services with self-hosted FOSS alternatives. Why? Well, it's clear that even Google knows they're evil and I am ready to reclaim my data! Plus homelab setups are just really fun. In this post, I'll explain my personal approach to de-googling my life.

Design Factors

For many of us, Google stores our entire lives, and when you buy into their enterprise systems you get a bunch of features. I want my ecosystem to share many of those features. As such, I decided immediately that this project must adhere to these core principles:

- Data redundancy is critical

- Zero-trust with backups

- Everything should Just Work™

Redundancy means the data should be well backed up and zero-trust means the off-site storage provider can't access the original content. Furthermore, I'd really like the system to just work. I don't want to remember bespoke commands or processes every time I need to access a service. It may sound like a lot, but as long as we keep these constraints in mind we can be thoughtful when choosing services.

Equipment

My design only requires a few components.

- An on-prem server with docker

- An internet-connected VPS

- A RAID-1 enclosure with some HDDs (optional)

I already had a server lying around with plenty of disk space, but I opted to buy a 2-bay RAID enclosure with 2x 8TB hard drives just for fun. This was utterly overkill because of the backup scheme I settled on, but it provides that sweet sweet sense of safety and lets me cosplay as an enterprise operation.

For the internet-connected VPS, I chose Hetzner as my provider because their pricing is cheaper than AWS and very transparent. This server hosts the Headscale control server, which lets all my devices connect to the private network from the internet.

Network Overview

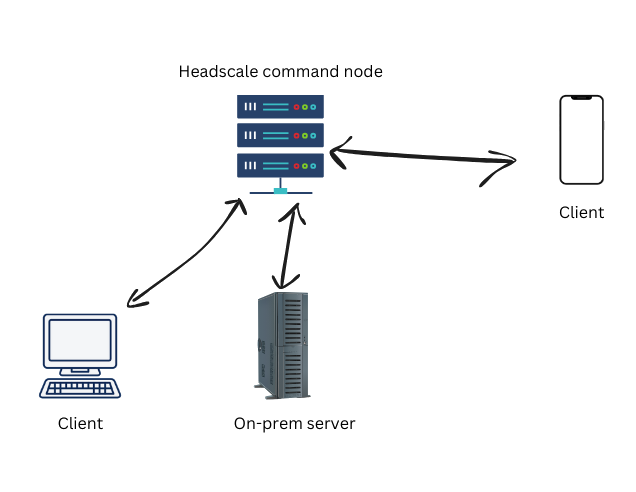

A public-facing jump box will host a Headscale control server. My on-prem server, mobile devices, and personal computers will all be onboarded to the tailnet, allowing everything to chat from anywhere in the world. The on-prem server will run Debian with Docker, serving all the Google replacements, and everything will be backed up to some remote object storage.

Headscale Control Server

The thing about Google is that it's always there. Do you want to access memories on the go? Sure. Need to add a new 2FA key while at work? Absolutely. There are a lot of services I may want to host that will require access "from the field". That leaves me with two options:

- I could allow external traffic to hit my on-prem server, giving me worldwide access.

- I could set up a VPN to allow secure tunneling into my network.

Immediately it was clear that I needed to set up a VPN. I've played the self-hosted server game before and... no thanks. I don't want to open my network to bots or figure out how to convince my ISP to provide a static IP address. Furthermore, I don't trust myself to secure a network better than an off-the-shelf solution might.

Meet Tailscale! In their own words, Tailscale is a "VPN that just works—securely connecting users, services, and devices without the complexity of traditional setups." and, hey, that's exactly what I want!

But you see, Tailscale is an enterprise solution. Yes, they have a free tier, but the constraints bother me and, more importantly, I can't verify anything about how they store the encryption keys. Scary. After some light research I stumbled upon Headscale, which is a FOSS implementation of the Tailscale control server. I like this a lot more because the encryption keys stay with me and all the proprietary limitations are lifted.

I opted to provision a very basic $4.99 USD Hetzner VPS in a region close to where I live and installed headscale on that server. Its entire purpose is to allow my devices to talk to each other.

With Hetzner, there's no default firewall, so I configured a basic one that allows ports 80, 443, and 22 for HTTP, HTTPS, and SSH traffic. It's worth mentioning: they do charge for traffic throughput. At the time of this writing, you get 1 TB by default, and as long as you aren't doing anything crazy that limit should be fine. You can select different instances for different throughput allowances.

On-Prem Server

The server in my living room isn't particularly beefy, but it gets the job done.

- AMD Ryzen 9 3900X

- 64 GB RAM

- 8 TB RAID-1 array

The most important feature is that it runs Docker. Referring to my earlier design constraint "data redundancy is critical", I wanted to ensure creating (and restoring) backups would be a trivial process. The easiest solution with my skillset is just hosting Docker containers with the -v flag pointing to a folder.

Backups become a simple matter of zipping that folder and uploading it somewhere. Very easy to reason about!
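As a rough illustration of "zip that folder", here is a minimal Python sketch. The function name `archive_volume`, the dated file name, and the folder layout are all assumptions made for this example, not the setup actually used in this post.

```python
import tarfile
from datetime import date
from pathlib import Path


def archive_volume(volume_dir: Path, out_dir: Path, today: date) -> Path:
    """Pack one bind-mounted folder into a single dated .tar.gz file.

    Hypothetical helper: the naming scheme is an assumption for illustration.
    """
    out_dir.mkdir(parents=True, exist_ok=True)
    archive_path = out_dir / f"{volume_dir.name}-{today.isoformat()}.tar.gz"
    with tarfile.open(archive_path, "w:gz") as tar:
        # arcname keeps paths inside the archive relative to the folder name,
        # so extracting it recreates "<folder>/..." wherever you unpack it.
        tar.add(volume_dir, arcname=volume_dir.name)
    return archive_path
```

Restoring is then just extracting the archive back to where the -v flag points. One caution: archiving a folder while a database container is writing to it can capture a half-written state, so stopping the container first is the safer default.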

Application Selection

Since I can only run docker images for my services, that limits my options a bit. I ended up going with the following suite of tools:

- Immich to replace Google Photos

- CryptPad to replace Google Docs

- Radicale to replace Google Calendar

- DokuWiki to document my processes and procedures

- SearXNG to replace Google Search

Each of these applications can run in a docker container and provide a surprising amount of parity with the Google services I am used to. If you are looking for even more stuff, this amazing list proved to be an invaluable resource: https://github.com/awesome-selfhosted/awesome-selfhosted

Routing the Services

I used subdomains for my private cloud services. Headscale seems to assign "permanent" IP addresses to the clients, which is great! So assigning memorable names became a simple matter of configuring the DNS settings. Here's an example of what I landed on:

    A     node.joshcole.dev      # Main tailnet IP address for your server
    CNAME docs.joshcole.dev      # Points to node.joshcole.dev
    CNAME photos.joshcole.dev    # Points to node.joshcole.dev
    CNAME calendar.joshcole.dev  # Points to node.joshcole.dev

Reverse Proxy

After configuring the DNS, I needed a way to actually route the traffic on the server. I'm familiar with nginx and have used it in the past as an SSL proxy, so that's what I did here too. This gave me HTTPS, friendly names, and a way to hide the port numbers of my services.

Here's an example configuration file:

    server {
        server_name wiki.joshcole.dev;
        listen 443 ssl;

        location / {
            proxy_pass http://127.0.0.1:1543;
        }

        # SSL Configuration
        ssl_certificate /etc/ssl/certs/nginx.sh.crt;
        ssl_certificate_key /etc/ssl/private/nginx.sh.key;
    }

    server {
        server_name wiki.joshcole.dev;
        listen 80;
        return 301 https://$server_name$request_uri;
    }

I copied and pasted this file for each subdomain I wanted to support (mainly changing the server_name directive for each file). And everything just worked as expected.
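If copy-and-paste gets tedious, the same files could be generated from a template. This is only a sketch of that idea, not what I actually did: `render_site` and the `SERVICES` map are hypothetical names, and the only host and port pair taken from this post is wiki.joshcole.dev on 1543.

```python
from string import Template

# Mirrors the example server blocks from this post.
# "$$" is Template's escape for a literal "$", needed for nginx's own variables.
SERVER_TEMPLATE = Template("""\
server {
    server_name $host;
    listen 443 ssl;

    location / {
        proxy_pass http://127.0.0.1:$port;
    }

    ssl_certificate /etc/ssl/certs/nginx.sh.crt;
    ssl_certificate_key /etc/ssl/private/nginx.sh.key;
}

server {
    server_name $host;
    listen 80;
    return 301 https://$$server_name$$request_uri;
}
""")


def render_site(host: str, port: int) -> str:
    """Return the nginx config text for one subdomain and local port."""
    return SERVER_TEMPLATE.substitute(host=host, port=port)


# Hypothetical map: the docs port below is a made-up placeholder.
SERVICES = {"wiki.joshcole.dev": 1543, "docs.joshcole.dev": 3000}

if __name__ == "__main__":
    for host, port in SERVICES.items():
        print(f"# ---- {host}.conf ----")
        print(render_site(host, port))
```

Each rendered block would be saved as its own file wherever your nginx install loads site configs, followed by a config test and reload.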

Encrypted Backups

If I just ended my project here, I'd be fully de-googled. Yay! But referring back to my project goal "data redundancy is critical", this system is pretty susceptible to a few failure modes:

- Disk failure

- Environmental catastrophe

- Accidental deletion

I need off-site backups. And furthermore, I don't want to trust any "Object Storage" provider with my data.

So here's the plan: I'll set up a cron job that tars my docker volume on a daily, weekly, monthly, and yearly cadence and uploads the result to S3. That's 4 copies of my data (yikes). I know that I should look at incremental backups. But data is cheap and I value the concept of simplicity over efficiency here.

In the event of a failure condition, I don't want to futz with incremental backups. I just want to unzip a file and call it a day.
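To make the four-copy scheme concrete, here is a small sketch of how a single daily job could decide which copies to overwrite. The specific rules (weekly on Sundays, monthly on the 1st, yearly on January 1st) and the object key names are assumptions for illustration. Four separate cron entries would work just as well.

```python
from datetime import date


def cadences_due(today: date) -> list[str]:
    """Return which backup slots a daily job should overwrite today.

    Assumed schedule: weekly on Sundays, monthly on the 1st,
    yearly on January 1st. The daily slot is always refreshed.
    """
    due = ["daily"]
    if today.weekday() == 6:  # Monday is 0, so 6 is Sunday
        due.append("weekly")
    if today.day == 1:
        due.append("monthly")
    if today.month == 1 and today.day == 1:
        due.append("yearly")
    return due


def object_keys(today: date, prefix: str = "backups") -> list[str]:
    """Map each due slot to one fixed object key (hypothetical naming).

    Reusing the same key per slot is what caps storage at four copies.
    """
    return [f"{prefix}/{slot}.tar.gz.gpg" for slot in cadences_due(today)]
```

Because each slot has a fixed key, every upload replaces the previous copy for that cadence, and restoring means downloading exactly one file.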

Before shipping off the compressed tar files, I encrypt them client-side using PGP. I'm not really a fan of PGP but the command line utility was easy to use so I went with it. This ensures that the encryption keys are never accessible by the cloud providers (as long as I don't leak them).
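For reference, the encryption step might look something like the sketch below, which shells out to the gpg command line tool. It assumes public-key encryption to a key already in the local keyring. The recipient value and both helper names are placeholders, and a symmetric passphrase setup would use different flags.

```python
import subprocess
from pathlib import Path


def gpg_encrypt_command(archive: Path, recipient: str) -> list[str]:
    """Build the gpg invocation that encrypts `archive` to `<archive>.gpg`.

    Assumption: `recipient` identifies a public key in the local keyring.
    """
    encrypted = archive.with_name(archive.name + ".gpg")
    return [
        "gpg",
        "--batch",  # never prompt, suitable for cron
        "--yes",  # overwrite an existing output file
        "--encrypt",
        "--recipient", recipient,
        "--output", str(encrypted),
        str(archive),
    ]


def encrypt_archive(archive: Path, recipient: str) -> Path:
    """Run gpg and return the encrypted file's path (raises on failure)."""
    cmd = gpg_encrypt_command(archive, recipient)
    subprocess.run(cmd, check=True)
    return Path(cmd[cmd.index("--output") + 1])
```

With public-key encryption, only the public key needs to live on the server, so the private key can stay offline until a restore is needed.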

Where to store the encryption keys?

There's a whole science to storing encryption keys, and I am not an authority on this subject. Maybe you could password-protect them and then upload that encryptception to object storage? I myself bought a thumb drive and put it in a fireproof box. Maybe I'll stash a second set at my friend's house. You could even print them on paper.

Whatever you go with, make sure to thoroughly research the pros and cons. And please document the restoration process for "future you"!

Conclusion

In summary, I set up a VPN, only chose docker-based services so backup was easy, encrypted all backups client-side, and did some basic DNS configurations so it's easy to remember where everything is.

After a few days of use, the only change I'd make is to find a way to separate generic document storage from CryptPad. CryptPad is great for collaboration, and that means it should be hosted in a more public location. Otherwise, I think this is a great initial setup and provides an excellent platform for further homelab experimenting.

Links

Here are some links to the resources mentioned in this article